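Node.js receives multiple requests from clients and places them into an event queue. Node.js is built on an event-driven architecture: a single-threaded event loop runs continuously, picking requests off the queue and processing them one at a time. There is no fixed limit on how many requests can be queued, but by default only one piece of JavaScript executes at any moment.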
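To make the queueing behavior concrete, here is a minimal sketch (not taken from any particular application) in which a zero-delay timer callback has to wait until the synchronous work on the main thread has finished. The `order` array and the 50 ms busy loop are illustrative assumptions.

```javascript
const order = [];

// Queue a callback; the event loop can only run it once the call stack is empty.
setTimeout(() => {
  order.push('timer callback');
  console.log(order.join(' -> '));
}, 0);

// Simulate CPU-bound work that blocks the single JavaScript thread for about 50 ms.
const start = Date.now();
while (Date.now() - start < 50) {
  // busy wait
}

// This runs before the timer callback, even though the timer delay was 0 ms.
order.push('synchronous work');
```

Running this prints `synchronous work -> timer callback`: the queued callback is not lost, it simply waits its turn, which is why long CPU-bound tasks delay every other request in a single Node.js process.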

However, it is possible to process multiple requests in parallel using the Node.js cluster module or the worker_threads module.
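The steps in this article use the cluster module. For comparison, the following is a rough sketch of the worker_threads approach, which moves a CPU-bound task onto a separate thread inside the same process. The function name `sumInWorker` and the summing task are hypothetical, chosen only for illustration; the worker source is passed as a string with `eval: true` so the sketch fits in a single file.

```javascript
const { Worker } = require('worker_threads');

// Code executed inside the worker thread (kept as a string so one file is enough).
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  let sum = 0;
  for (let i = 0; i < workerData.limit; i++) sum += i;
  parentPort.postMessage(sum);
`;

// Hypothetical helper: sums 0..limit-1 on a separate thread and resolves with the result.
function sumInWorker(limit) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: { limit } });
    worker.once('message', resolve);
    worker.once('error', reject);
  });
}

sumInWorker(1e6).then((sum) => {
  console.log(`Sum computed off the main thread: ${sum}`);
});
```

As a rule of thumb, cluster runs several copies of the whole server as separate processes, while worker_threads offloads individual tasks within one process.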

Step 1: Create a Node.js application and install the required Express.js module.

mkdir Project && cd Project

npm init -y

npm i express

Step 2: Create an index.js file in your root directory with the following code.

const express = require('express');

const cluster = require('cluster');

// Check the number of available CPUs.

const numCPUs = require('os').cpus().length;

const app = express();

const PORT = 3000;

// For the master process (cluster.isMaster is deprecated since Node.js 16 in favor of cluster.isPrimary)

if (cluster.isMaster) {

console.log(`Master ${process.pid} is running`);

// Fork workers.

for (let i = 0; i < numCPUs; i++) {

cluster.fork();

}

// This event fires when a worker dies

cluster.on('exit', (worker, code, signal) => {

console.log(`worker ${worker.process.pid} died`);

});

}

// For Worker

else{

// Workers can share any TCP connection

// In this case it is an HTTP server

app.listen(PORT, err =>{

err ?

console.log("Error in server setup") :

console.log(`Worker ${process.pid} started`);

});

}
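The `exit` handler above only logs that a worker died, so the pool shrinks each time one crashes. A common extension is to fork a replacement. The following is a minimal sketch of that idea, not part of the original example; `shouldRespawn` and `enableRespawn` are hypothetical helper names.

```javascript
const cluster = require('cluster');

// Hypothetical helper: decide whether a dead worker should be replaced.
// worker.exitedAfterDisconnect is true when the worker was stopped on purpose
// (via worker.disconnect() or worker.kill()), so those exits are not treated as crashes.
function shouldRespawn(worker, code) {
  return !worker.exitedAfterDisconnect && code !== 0;
}

// Hypothetical helper: call this once from the master branch of index.js.
function enableRespawn() {
  cluster.on('exit', (worker, code, signal) => {
    if (shouldRespawn(worker, code)) {
      console.log(`worker ${worker.process.pid} crashed (code ${code}, signal ${signal}); forking a replacement`);
      cluster.fork();
    }
  });
}
```

In the code above, `enableRespawn()` would be called inside the `if (cluster.isMaster)` branch, after the initial fork loop. In production it is usually wise to add a restart limit or delay so that a worker that crashes on startup does not cause an endless fork loop.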

Step 3: Run the index.js file using the following command.

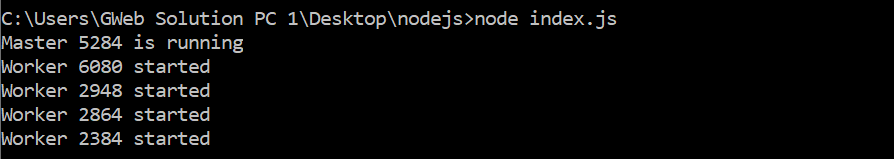

node index.js

I have a 4-core CPU with 4 threads, so numCPUs is 4 and four workers are forked.

Output: